Changes to Robots – How the New Framework Addresses Autonomous Systems – Part 1

17 Jul 2025

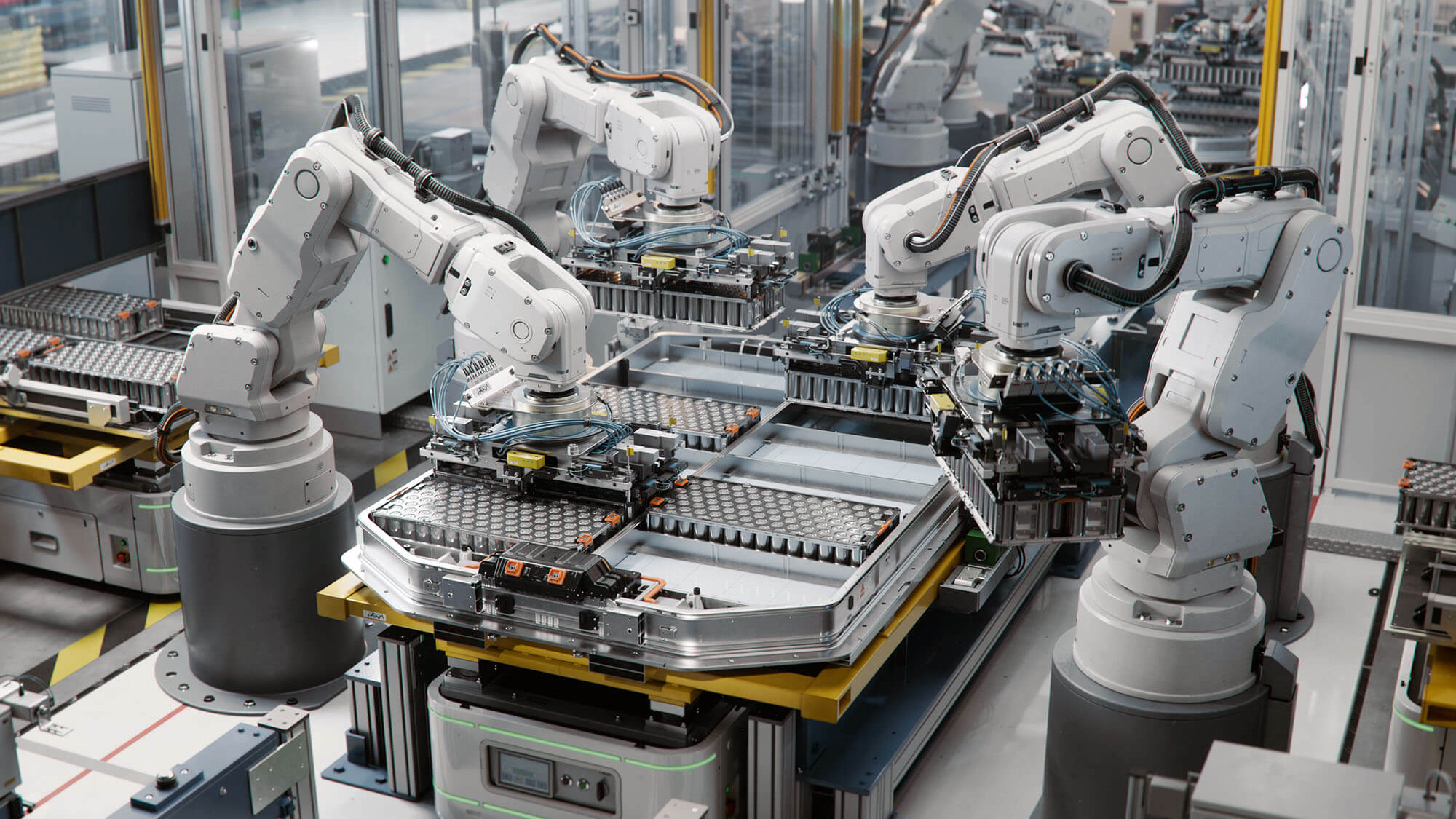

Bridging the gap between traditional mechanical design and modern machine behaviour

This blog is the first in a series exploring how the EU's new regulatory landscape is reshaping the way we approach robotics and autonomous systems – particularly in the context of the shift from Industry 4.0 to Industry 5.0.

The arrival of the new EU Machinery Regulation marks a long-awaited shift in how we think about machines, especially those that act with a level of independence. For years, we’ve seen robots becoming more adaptive, connected, and intelligent. What was missing was a framework that acknowledged this evolution in a meaningful, enforceable way. Now, we have it.

What strikes me most is how the regulation begins to bridge the gap between traditional mechanical design and modern machine behaviour. It doesn’t treat robots like just another type of machine. Instead, it starts to account for how autonomy and learning introduce new types of risks, ones that cannot be fully captured by hardware checks or deterministic logic.

From my perspective, one of the most significant changes is the recognition that certain AI features or adaptive systems are now considered “high-risk,” particularly if their behaviour influences safety. That means the days of self-declared CE marking for these machines or components are over. Manufacturers now have to involve EU Notified Bodies and rethink how they validate functionality when outcomes may evolve over time.

This raises some real challenges. How do we prove safety for systems that change? How do we set safe limits on what a machine is allowed to learn? Many of the traditional functional safety standards, such as ISO 13849 or IEC 62061, were not built for models that adjust themselves based on live data. And even if we treat the AI as a component, we still need robust methods for ensuring its behaviour remains within safe bounds.
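One common way to reason about "safe bounds" is to wrap the adaptive part in a fixed, deterministic limiter that can be validated on its own. The sketch below is only an illustration of that idea, not a method prescribed by the regulation or by any standard. The names `SafeEnvelope` and `limit_command` are hypothetical, and the limit values are arbitrary placeholders rather than figures taken from a standard.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SafeEnvelope:
    """Fixed limits set at design time (placeholder values, not from a standard)."""
    max_speed_mm_s: float
    max_force_n: float


def limit_command(speed_mm_s: float, force_n: float,
                  envelope: SafeEnvelope) -> tuple[float, float, bool]:
    """Clamp a learned controller's proposed command to the fixed envelope.

    Returns the (possibly clamped) speed and force, plus a flag that records
    whether the proposal had to be overridden.
    """
    # A non-finite proposal (NaN or infinity) is replaced by a zero command.
    if not (math.isfinite(speed_mm_s) and math.isfinite(force_n)):
        return 0.0, 0.0, True

    # Speed may be negative (reverse motion); force is treated as a magnitude.
    safe_speed = max(-envelope.max_speed_mm_s,
                     min(speed_mm_s, envelope.max_speed_mm_s))
    safe_force = max(0.0, min(force_n, envelope.max_force_n))
    overridden = (safe_speed != speed_mm_s) or (safe_force != force_n)
    return safe_speed, safe_force, overridden


envelope = SafeEnvelope(max_speed_mm_s=250.0, max_force_n=100.0)
print(limit_command(400.0, 60.0, envelope))
```

The point of the design is that the limiter does not change when the model does, so it can be assessed with conventional deterministic methods while the learned part evolves behind it. Logging the override flag also gives a simple signal that the model is drifting towards its limits.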

Cybersecurity is another area where I see tension emerging. The regulation now considers digital threats as direct safety risks. If a robot’s logic can be altered through a network or an unsecured update, that’s a real hazard, not just an IT concern. This forces manufacturers to tighten how they handle software updates, remote access, and even data logging. In short, safety isn’t just about sensors and stops anymore; it is also about digital integrity.
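To make the update problem concrete, here is a minimal sketch of one integrity check: refusing an update package unless its SHA-256 digest matches a value obtained through a separate trusted channel. The function name `is_update_trusted` and the sample payload are hypothetical. A real deployment would more likely rely on digital signatures and a secure boot chain, which this example does not attempt to show.

```python
import hashlib
import hmac


def is_update_trusted(package: bytes, expected_sha256_hex: str) -> bool:
    """Return True only if the package's SHA-256 digest matches the expected one."""
    actual = hashlib.sha256(package).hexdigest()
    # compare_digest does a constant-time comparison of the two hex strings.
    return hmac.compare_digest(actual, expected_sha256_hex.strip().lower())


# Hypothetical payload; the expected digest would normally come from the vendor.
package = b"controller-logic-update"
expected = hashlib.sha256(package).hexdigest()

print(is_update_trusted(package, expected))            # untouched package
print(is_update_trusted(package + b"\x00", expected))  # altered package
```

A digest check only shows that the package was not altered in transit. It says nothing about who produced it, which is why signatures are usually the stronger control.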

There is also a broader systems issue. With more machines becoming software-defined, the responsibility for compliance no longer sits only with the original equipment manufacturer. If you are integrating third-party AI systems or components, modifying the robot after delivery, or even developing custom safety logic, you can now be treated as the manufacturer in the eyes of the regulation. That’s a big change, and one I think will catch some operators off guard.

In terms of alignment, this regulation evidently doesn’t stand alone. The AI Act complements it, and we’ll need to keep an eye on the development of harmonized standards that will make compliance more tangible. But even now, the direction is clear: autonomy must be accountable.

These changes also raise new challenges for those of us working in compliance and safety roles. Assessing intelligent systems that evolve over time, or ensuring digital safety across a machine’s lifecycle, requires new tools, new thinking, and in some cases, entirely new competencies. I’ll explore that more in the next post.

Ultimately, this regulation pushes us as engineers, designers, and developers to think more critically about the systems we’re building. We’re not just making smarter machines; we are reshaping how they interact with the world, and that comes with new responsibilities.